2D Cylinder Flow

This notebook requires MindSpore version >= 2.0.0 to support new APIs including: mindspore.jit, mindspore.jit_class, mindspore.jacrev.

Overview

Flow past a cylinder is a two-dimensional, low-velocity steady flow around a cylinder whose behavior depends only on the Reynolds number Re. When Re is less than or equal to 1, the inertial force in the flow field is secondary to the viscous force, the streamlines upstream and downstream of the cylinder are symmetrical, and the drag coefficient is approximately inversely proportional to Re. Flow in this range of Re is called the Stokes zone. As Re increases, the streamlines upstream and downstream of the cylinder gradually lose symmetry. This phenomenon reflects the interaction between the fluid and the surface of the body, and solving flow past a cylinder is a classical problem in hydromechanics.

Since a general theoretical solution of the Navier-Stokes equation is difficult to obtain, numerical methods are used to solve the governing equation and predict the flow field in the flow past cylinder scenario, which is also a classical problem in computational fluid mechanics. Traditional solvers often require a fine discretization of the fluid domain to capture the phenomena being modeled. As a result, the traditional finite element method (FEM) and finite difference method (FDM) are often costly.

Physics-informed Neural Networks (PINNs) provide a new way to solve complex fluid problems quickly by combining loss functions that approximate the governing equations with simple network configurations. In this case, the data-driven nature of neural networks is used together with PINNs to solve the flow past cylinder problem.

Problem Description

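The Navier-Stokes equation, referred to as the N-S equation, is a classical partial differential equation in fluid mechanics. For viscous incompressible flow, the dimensionless N-S equation has the following form:

$$\frac{\partial u}{\partial x} + \frac{\partial v}{\partial y} = 0$$

$$\frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} + v\frac{\partial u}{\partial y} = -\frac{\partial p}{\partial x} + \frac{1}{Re}\left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right)$$

$$\frac{\partial v}{\partial t} + u\frac{\partial v}{\partial x} + v\frac{\partial v}{\partial y} = -\frac{\partial p}{\partial y} + \frac{1}{Re}\left(\frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2}\right)$$

where Re stands for the Reynolds number, u and v are the velocity components in the x and y directions, and p is the pressure.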
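As a sanity check on the residual form, the sketch below evaluates the momentum and continuity residuals, written the same way as the `momentum_x`, `momentum_y` and continuity expressions printed in the training log, on the Taylor-Green vortex, a known analytic solution of the incompressible N-S equations. The test solution, the helper names `d1`, `d2` and `residuals`, and the finite-difference step `h` are illustrative assumptions and are not part of MindFlow.

```python
import math

RE = 100.0     # Reynolds number used in this case
NU = 1.0 / RE  # dimensionless viscous coefficient 1/Re


def u(x, y, t):
    return math.cos(x) * math.sin(y) * math.exp(-2.0 * NU * t)


def v(x, y, t):
    return -math.sin(x) * math.cos(y) * math.exp(-2.0 * NU * t)


def p(x, y, t):
    return -0.25 * (math.cos(2.0 * x) + math.cos(2.0 * y)) * math.exp(-4.0 * NU * t)


def d1(f, args, i, h=1e-3):
    """Central-difference first derivative of f with respect to argument i."""
    hi, lo = list(args), list(args)
    hi[i] += h
    lo[i] -= h
    return (f(*hi) - f(*lo)) / (2.0 * h)


def d2(f, args, i, h=1e-3):
    """Central-difference second derivative of f with respect to argument i."""
    hi, lo = list(args), list(args)
    hi[i] += h
    lo[i] -= h
    return (f(*hi) - 2.0 * f(*args) + f(*lo)) / (h * h)


def residuals(x, y, t):
    """Return (momentum_x, momentum_y, continuity) residuals at one point."""
    a = (x, y, t)
    mom_x = (d1(u, a, 2) + u(*a) * d1(u, a, 0) + v(*a) * d1(u, a, 1)
             + d1(p, a, 0) - NU * (d2(u, a, 0) + d2(u, a, 1)))
    mom_y = (d1(v, a, 2) + u(*a) * d1(v, a, 0) + v(*a) * d1(v, a, 1)
             + d1(p, a, 1) - NU * (d2(v, a, 0) + d2(v, a, 1)))
    cont = d1(u, a, 0) + d1(v, a, 1)
    return mom_x, mom_y, cont


print(residuals(0.3, 1.1, 0.5))  # all three values should be close to zero
```

A PINN minimizes the same three residuals, except that the derivatives come from automatic differentiation of the network output rather than from finite differences.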

In this case, PINNs learn the mapping from location and time (x, y, t) to the flow field quantities (u, v, p), and thereby solve the N-S equation.

Technology Path

MindFlow solves the problem as follows:

1. Training Dataset Construction.

2. Model Construction.

3. Multi-task Learning for Adaptive Losses.

4. Optimizer.

5. NavierStokes2D.

6. Model Training.

7. Model Evaluation and Visualization.

[1]:

import time

import numpy as np

import mindspore
from mindspore import context, nn, ops, Tensor, jit, set_seed, load_checkpoint, load_param_into_net
from mindspore import dtype as mstype

The following src package can be downloaded from applications/physics_driven/flow_past_cylinder/src.

[2]:

from mindflow.cell import MultiScaleFCCell
from mindflow.loss import MTLWeightedLossCell
from mindflow.pde import NavierStokes, sympy_to_mindspore
from mindflow.utils import load_yaml_config

from src import create_training_dataset, create_test_dataset, calculate_l2_error

# fix the random seeds so that runs are reproducible
set_seed(123456)
np.random.seed(123456)

[3]:

# set context for training: using graph mode for high performance training with GPU acceleration
config = load_yaml_config('cylinder_flow.yaml')
context.set_context(mode=context.GRAPH_MODE, device_target="GPU", device_id=3)
use_ascend = context.get_context(attr_key='device_target') == "Ascend"

Training Dataset Construction

In this case, the initial condition and boundary condition data are sampled from an existing solution of flow around a cylinder at Reynolds number 100. For the training dataset, a planar rectangular problem domain and a time dimension are constructed, and the known initial and boundary conditions are then sampled. The test dataset is constructed from the existing points in the flow field.

Download the training and test datasets from physics_driven/flow_past_cylinder/dataset.

[4]:

# create training dataset
cylinder_flow_train_dataset = create_training_dataset(config)
cylinder_dataset = cylinder_flow_train_dataset.create_dataset(batch_size=config["train_batch_size"],
                                                              shuffle=True,
                                                              prebatched_data=True,
                                                              drop_remainder=True)

# create test dataset
inputs, label = create_test_dataset(config["test_data_path"])

./flow_past_cylinder/dataset

get dataset path: ./flow_past_cylinder/dataset

check eval dataset length: (36, 100, 50, 3)
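The sampling of the space-time domain described above can be sketched with NumPy. This is only an illustration of uniform sampling in a rectangle crossed with a time interval. It does not reproduce `create_training_dataset`, and the function name `sample_space_time` and the bounds used here are hypothetical (the real bounds come from `cylinder_flow.yaml`).

```python
import numpy as np


def sample_space_time(n, coord_min, coord_max, t_min, t_max, seed=0):
    """Uniformly sample n points (x, y, t) in a rectangle crossed with a time interval."""
    rng = np.random.default_rng(seed)
    low = np.array(list(coord_min) + [t_min], dtype=np.float64)
    high = np.array(list(coord_max) + [t_max], dtype=np.float64)
    return rng.uniform(low, high, size=(n, 3)).astype(np.float32)


# Hypothetical bounds, for illustration only.
pde_points = sample_space_time(1024, [1.0, -2.0], [8.0, 2.0], 0.0, 7.0)

# Initial-condition points share the spatial domain but sit at t = t_min.
ic_points = pde_points.copy()
ic_points[:, 2] = 0.0

print(pde_points.shape, ic_points[:3])
```

Points sampled this way feed the PDE residual loss, while the initial and boundary points are paired with labels taken from the existing flow field.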

Model Construction

This example uses a simple fully-connected network with a depth of 6 layers and tanh as the activation function.

[5]:

# lower and upper bounds of the inputs (x, y, t)
coord_min = np.array(config["geometry"]["coord_min"] + [config["geometry"]["time_min"]]).astype(np.float32)
coord_max = np.array(config["geometry"]["coord_max"] + [config["geometry"]["time_max"]]).astype(np.float32)

# center and scale used to normalize the network inputs
input_center = list(0.5 * (coord_max + coord_min))
input_scale = list(2.0 / (coord_max - coord_min))

model = MultiScaleFCCell(in_channels=config["model"]["in_channels"],
                         out_channels=config["model"]["out_channels"],
                         layers=config["model"]["layers"],
                         neurons=config["model"]["neurons"],
                         residual=config["model"]["residual"],
                         act='tanh',
                         num_scales=1,
                         input_scale=input_scale,
                         input_center=input_center)
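The `input_center` and `input_scale` values computed above are chosen so that an affine map sends the input range to [-1, 1] in each dimension. The sketch below checks this with NumPy. It assumes the map is `(x - input_center) * input_scale`, which is an assumption about how `MultiScaleFCCell` uses these arguments, and the bounds are hypothetical stand-ins for the values in the configuration file.

```python
import numpy as np

# Hypothetical bounds for (x, y, t); the real ones are read from the config.
coord_min = np.array([1.0, -2.0, 0.0], dtype=np.float32)
coord_max = np.array([8.0, 2.0, 7.0], dtype=np.float32)

input_center = 0.5 * (coord_max + coord_min)
input_scale = 2.0 / (coord_max - coord_min)


def normalize(points):
    """Assumed normalization: shift to the center, then scale to [-1, 1]."""
    return (points - input_center) * input_scale


corners = normalize(np.stack([coord_min, coord_max]))
print(corners)  # first row maps to -1, second row maps to +1
```

Keeping the inputs in a symmetric unit range is a common choice for tanh networks, because it keeps the first-layer activations away from saturation.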

Multi-task Learning for Adaptive Losses

The PINNs method has to optimize several losses at the same time, which makes the optimization process challenging. Here, we adopt the uncertainty weighting algorithm proposed by Kendall, Gal, and Cipolla ("Multi-task learning using uncertainty to weigh losses for scene geometry and semantics", CVPR, 2018) to adjust the loss weights dynamically.

[6]:

mtl = MTLWeightedLossCell(num_losses=cylinder_flow_train_dataset.num_dataset)
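For intuition, uncertainty weighting in the style of Kendall et al. can be sketched in plain Python. Each loss L_i gets a learnable log-variance s_i = log(sigma_i^2), and the total is the sum of 0.5 * exp(-s_i) * L_i + 0.5 * s_i. The function name `uncertainty_weighted_loss` is hypothetical, and the exact parameterization inside `MTLWeightedLossCell` may differ from this form.

```python
import math


def uncertainty_weighted_loss(losses, log_vars):
    """Combine task losses using learnable log-variances s_i = log(sigma_i^2)."""
    if len(losses) != len(log_vars):
        raise ValueError("losses and log_vars must have the same length")
    # 0.5 * exp(-s) down-weights a noisy task; 0.5 * s penalizes large variances.
    return sum(0.5 * math.exp(-s) * loss + 0.5 * s
               for loss, s in zip(losses, log_vars))


# With all log-variances at zero, every loss is simply weighted by 0.5.
print(uncertainty_weighted_loss([0.8, 0.1, 0.1], [0.0, 0.0, 0.0]))
```

For a fixed loss value L_i, the expression is minimized at s_i = log(L_i), so tasks with larger losses end up with smaller weights while the regularization term keeps the weights from collapsing to zero.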

Optimizer

[7]:

# optionally restore the network and loss-weight parameters from a checkpoint
if config["load_ckpt"]:
    param_dict = load_checkpoint(config["load_ckpt_path"])
    load_param_into_net(model, param_dict)
    load_param_into_net(mtl, param_dict)

# define optimizer
params = model.trainable_params() + mtl.trainable_params()
optimizer = nn.Adam(params, config["optimizer"]["initial_lr"])

Model Training

With MindSpore version >= 2.0.0, neural networks can be trained in a functional programming style.

[9]:

def train():
    # NavierStokes2D is the problem class from the NavierStokes2D step; it is not
    # imported above, so it must be defined before train() is called
    problem = NavierStokes2D(model)

    from mindspore.amp import DynamicLossScaler, auto_mixed_precision, all_finite

    # mixed precision with loss scaling is only enabled on Ascend
    if use_ascend:
        loss_scaler = DynamicLossScaler(1024, 2, 100)
        auto_mixed_precision(model, 'O3')
    else:
        loss_scaler = None

    # the loss function receives 5 data sources: pde, ic, ic_label, bc and bc_label
    def forward_fn(pde_data, ic_data, ic_label, bc_data, bc_label):
        loss = problem.get_loss(pde_data, ic_data, ic_label, bc_data, bc_label)
        if use_ascend:
            loss = loss_scaler.scale(loss)
        return loss

    grad_fn = ops.value_and_grad(forward_fn, None, optimizer.parameters, has_aux=False)

    # using jit function to accelerate training process
    @jit
    def train_step(pde_data, ic_data, ic_label, bc_data, bc_label):
        loss, grads = grad_fn(pde_data, ic_data, ic_label, bc_data, bc_label)
        if use_ascend:
            loss = loss_scaler.unscale(loss)
            if all_finite(grads):
                grads = loss_scaler.unscale(grads)
        loss = ops.depend(loss, optimizer(grads))
        return loss

    steps = config["train_steps"]
    sink_process = mindspore.data_sink(train_step, cylinder_dataset, sink_size=1)
    model.set_train()

    for step in range(steps + 1):
        local_time_beg = time.time()
        cur_loss = sink_process()
        # report the loss and the test error every 100 steps
        if step % 100 == 0:
            print(f"loss: {cur_loss.asnumpy():>7f}")
            print("step: {}, time elapsed: {}ms".format(step, (time.time() - local_time_beg)*1000))
            calculate_l2_error(model, inputs, label, config)
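The three arguments in `DynamicLossScaler(1024, 2, 100)` are the initial scale value, the scale factor and the scale window. The plain-Python class below sketches the usual dynamic loss scaling rule that these parameters control. `ToyDynamicLossScaler` is a hypothetical stand-in written for illustration and is not MindSpore's implementation. Note that the training step above only calls `scale` and `unscale`, so the adjustment step shown here is not exercised by this notebook.

```python
class ToyDynamicLossScaler:
    """Illustrative dynamic loss scaler (not the MindSpore class)."""

    def __init__(self, scale_value=1024.0, scale_factor=2.0, scale_window=100):
        self.scale_value = float(scale_value)
        self.scale_factor = float(scale_factor)
        self.scale_window = int(scale_window)
        self.good_steps = 0  # consecutive steps with finite gradients

    def scale(self, value):
        return value * self.scale_value

    def unscale(self, value):
        return value / self.scale_value

    def adjust(self, grads_finite):
        if grads_finite:
            self.good_steps += 1
            # grow the scale after a full window of overflow-free steps
            if self.good_steps == self.scale_window:
                self.scale_value *= self.scale_factor
                self.good_steps = 0
        else:
            # shrink the scale on overflow, but never below 1
            self.scale_value = max(1.0, self.scale_value / self.scale_factor)
            self.good_steps = 0


scaler = ToyDynamicLossScaler(1024, 2, 100)
for _ in range(100):
    scaler.adjust(grads_finite=True)
print(scaler.scale_value)
```

Scaling the loss before differentiation keeps small float16 gradients from underflowing, and unscaling restores their true magnitude before the optimizer update.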

[10]:

time_beg = time.time()
train()
print("End-to-End total time: {} s".format(time.time() - time_beg))

momentum_x: u(x, y, t)*Derivative(u(x, y, t), x) + v(x, y, t)*Derivative(u(x, y, t), y) + Derivative(p(x, y, t), x) + Derivative(u(x, y, t), t) - 0.00999999977648258*Derivative(u(x, y, t), (x, 2)) - 0.00999999977648258*Derivative(u(x, y, t), (y, 2))

Item numbers of current derivative formula nodes: 6

momentum_y: u(x, y, t)*Derivative(v(x, y, t), x) + v(x, y, t)*Derivative(v(x, y, t), y) + Derivative(p(x, y, t), y) + Derivative(v(x, y, t), t) - 0.00999999977648258*Derivative(v(x, y, t), (x, 2)) - 0.00999999977648258*Derivative(v(x, y, t), (y, 2))

Item numbers of current derivative formula nodes: 6

continuty: Derivative(u(x, y, t), x) + Derivative(v(x, y, t), y)

Item numbers of current derivative formula nodes: 2

ic_u: u(x, y, t)

Item numbers of current derivative formula nodes: 1

ic_v: v(x, y, t)

Item numbers of current derivative formula nodes: 1

bc_u: u(x, y, t)

Item numbers of current derivative formula nodes: 1

bc_v: v(x, y, t)

Item numbers of current derivative formula nodes: 1

bc_p: p(x, y, t)

Item numbers of current derivative formula nodes: 1

loss: 0.762854

step: 0, time elapsed: 8018.740892410278ms

predict total time: 348.56629371643066 ms

l2_error, U: 0.929843072812499 , V: 1.0664025634627166 , P: 1.2470654215761847 , Total: 0.9487255679809018

==================================================================================================

loss: 0.099878

step: 100, time elapsed: 364.26734924316406ms

predict total time: 26.329755783081055 ms

l2_error, U: 0.32336308802455266 , V: 0.9999815366016505 , P: 0.7799935842779347 , Total: 0.43305926535738415

==================================================================================================

loss: 0.099729

step: 200, time elapsed: 365.6139373779297ms

predict total time: 31.240463256835938 ms

l2_error, U: 0.3234011910681541 , V: 1.0001619978094591 , P: 0.7813910733696886 , Total: 0.4331668769990714

==================================================================================================

loss: 0.093919

step: 300, time elapsed: 367.8765296936035ms

predict total time: 25.576353073120117 ms

l2_error, U: 0.30047520000238387 , V: 1.000794648538466 , P: 0.79058399174337 , Total: 0.4185132401858534

==================================================================================================

loss: 0.054179

step: 400, time elapsed: 364.1970157623291ms

predict total time: 29.039621353149414 ms

l2_error, U: 0.20330252312566804 , V: 0.9996817093977537 , P: 0.7340101922590306 , Total: 0.359612937152551

==================================================================================================

...

==================================================================================================

loss: 0.000437

step: 11700, time elapsed: 369.844913482666ms

predict total time: 98.89841079711914 ms

l2_error, U: 0.05508347849471555 , V: 0.21316098417551024 , P: 0.2859434784168006 , Total: 0.08947950657758963

==================================================================================================

loss: 0.000499

step: 11800, time elapsed: 380.2335262298584ms

predict total time: 108.20960998535156 ms

l2_error, U: 0.054145983320779696 , V: 0.21175303254015415 , P: 0.28978443844341173 , Total: 0.08892416803453118

==================================================================================================

loss: 0.000634

step: 11900, time elapsed: 396.8188762664795ms

predict total time: 98.45995903015137 ms

l2_error, U: 0.05270965792134252 , V: 0.2132374431010393 , P: 0.29610047782600435 , Total: 0.08883453152457486

==================================================================================================

loss: 0.000495

step: 12000, time elapsed: 373.43478202819824ms

predict total time: 89.78271484375 ms

l2_error, U: 0.05282220780283167 , V: 0.20572236320159534 , P: 0.28065431462629 , Total: 0.0864571051248727

==================================================================================================

End-to-End total time: 4497.61700296402 s
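The viscous coefficient printed in the `momentum_x` and `momentum_y` lines of the log above, 0.00999999977648258, is consistent with 1/Re = 0.01 for Re = 100 after rounding to single precision. The sketch below reproduces that value with the standard library. Treating the stored coefficient as a float32 number is an inference from the printed digits, not something the log states.

```python
import struct

RE = 100.0
nu = 1.0 / RE  # exact intent: 0.01

# Round-trip through a 4-byte float to emulate float32 storage.
nu_f32 = struct.unpack("f", struct.pack("f", nu))[0]

print(nu, nu_f32)
```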

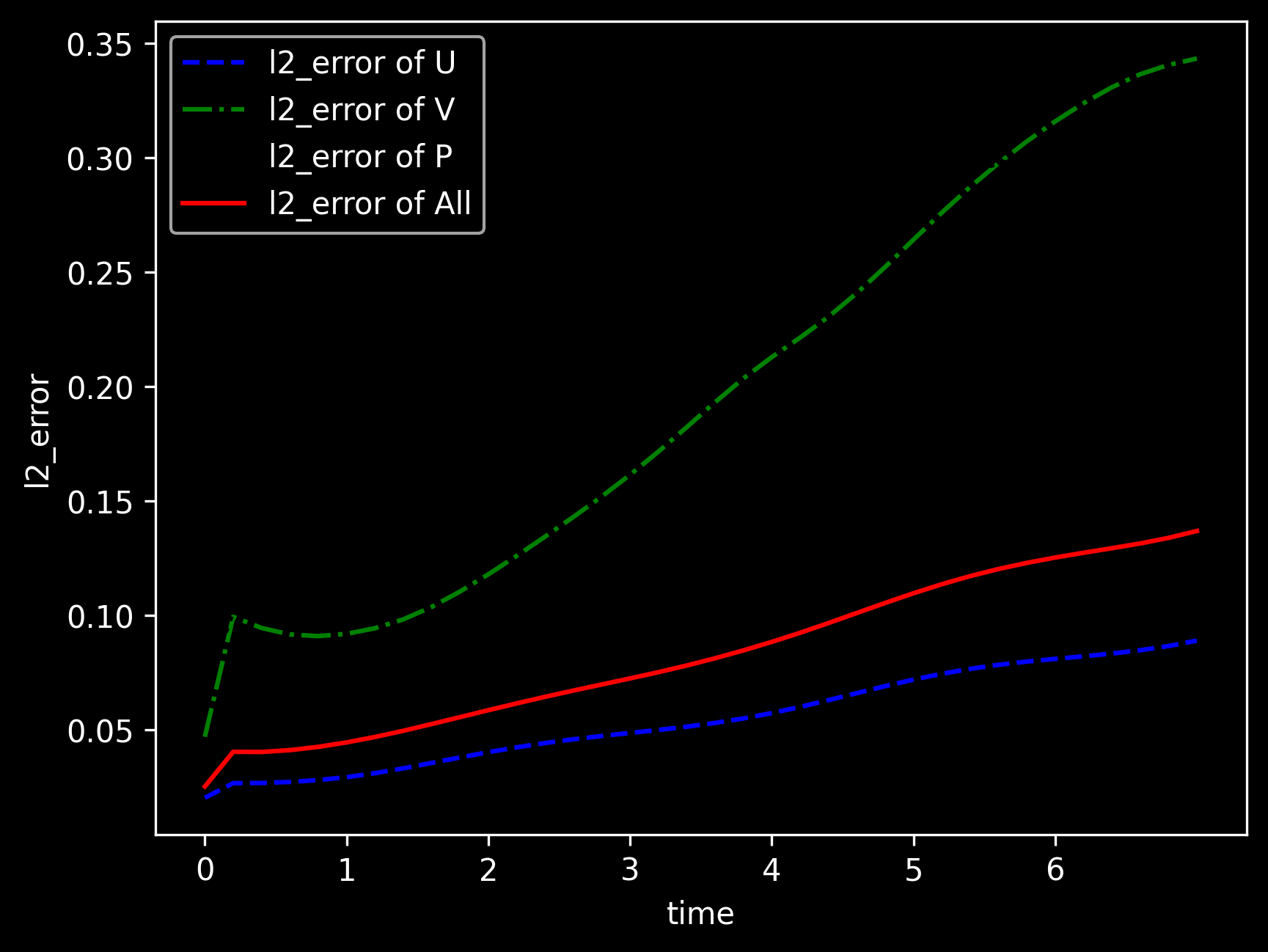

Model Evaluation and Visualization

After training, the model can infer the flow field at all data points, and the results can be visualized.

[11]:

from src import visualization

# visualization
visualization(model=model, step=config["train_steps"], input_data=inputs, label=label)
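The `l2_error` values printed during training report one number each for U, V and P plus a total. A common way to define such metrics is the relative L2 error, the norm of the prediction error divided by the norm of the label. The sketch below shows that definition with NumPy. The function name `relative_l2_errors` is hypothetical, and it is an assumption that `calculate_l2_error` in the `src` package computes its values in this way.

```python
import numpy as np


def relative_l2_errors(prediction, label):
    """Relative L2 errors for arrays of shape (..., 3) holding (u, v, p)."""
    diff = prediction - label
    errors = {}
    for i, name in enumerate(("U", "V", "P")):
        errors[name] = float(np.linalg.norm(diff[..., i]) / np.linalg.norm(label[..., i]))
    # the total error uses all three channels together
    errors["Total"] = float(np.linalg.norm(diff) / np.linalg.norm(label))
    return errors


# Synthetic data for illustration: a prediction that is 10% too large everywhere.
label = np.ones((4, 5, 3))
prediction = 1.1 * label
print(relative_l2_errors(prediction, label))
```

Under this definition the total is dominated by the channel with the largest magnitude, so a small total error can coexist with larger errors in the individual channels.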