mindspore.mint.nn.functional.leaky_relu

- mindspore.mint.nn.functional.leaky_relu(input, negative_slope=0.01)

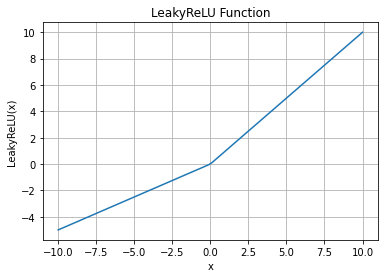

leaky_relu activation function. Elements of input that are less than 0 are multiplied by negative_slope; all other elements are returned unchanged.

The activation function is defined as:

\[\text{leaky\_relu}(input) = \begin{cases}input, &\text{if } input \geq 0; \cr \text{negative\_slope} * input, &\text{otherwise.}\end{cases}\]

where \(negative\_slope\) represents the negative_slope parameter.

For more details, see Rectifier Nonlinearities Improve Neural Network Acoustic Models.
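
The piecewise definition above can be sketched in plain NumPy to make the element-wise behaviour concrete. This is only an illustrative reference, not the MindSpore implementation: `leaky_relu_reference` is a hypothetical helper name, and the sketch assumes a floating-point input array.

```python
import numpy as np


def leaky_relu_reference(x, negative_slope=0.01):
    """Hypothetical NumPy reference for the piecewise definition (not part of MindSpore)."""
    x = np.asarray(x)
    # Elements >= 0 pass through unchanged; elements < 0 are scaled by negative_slope.
    return np.where(x >= 0, x, negative_slope * x)


# Same values as the example input used on this page.
x = np.array([[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]], dtype=np.float32)
y = leaky_relu_reference(x, negative_slope=0.2)
print(y)
```

With negative_slope=0.2, the negative entries -1, -8 and -5 become -0.2, -1.6 and -1, while the non-negative entries are unchanged, and the result keeps the shape and dtype of the input.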

LeakyReLU Activation Function Graph:

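- Parameters

input (Tensor) - The input tensor.

negative_slope (Union[int, float], optional) - Slope applied to elements of input that are less than 0. Default: 0.01.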

- Returns

Tensor, has the same type and shape as the input.

- Raises

- Supported Platforms:

Ascend

Examples

>>> import mindspore
>>> import numpy as np
>>> from mindspore import Tensor, mint
>>> input = Tensor(np.array([[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]), mindspore.float32)
>>> print(mint.nn.functional.leaky_relu(input, negative_slope=0.2))
[[-0.2  4.  -1.6]
 [ 2.  -1.   9. ]]