mindspore.ops.hardswish

- mindspore.ops.hardswish(x)

Applies the Hard Swish (hswish) activation function element-wise. The input can be a Tensor of any valid shape.

Hard swish is defined as:

\[\text{hswish}(x_{i}) = x_{i} \cdot \frac{\text{ReLU6}(x_{i} + 3)}{6}\]

where \(x_i\) is an element of the input Tensor.
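Because \(\text{ReLU6}(z) = \min(\max(z, 0), 6)\), substituting \(z = x_i + 3\) splits the definition into three regions: zero for \(x_i \le -3\), the identity for \(x_i \ge 3\), and a quadratic in between. This piecewise form is derived directly from the formula above.

\[
\text{hswish}(x_{i}) =
\begin{cases}
0, & x_{i} \le -3,\\
\dfrac{x_{i}\,(x_{i} + 3)}{6}, & -3 < x_{i} < 3,\\
x_{i}, & x_{i} \ge 3.
\end{cases}
\]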

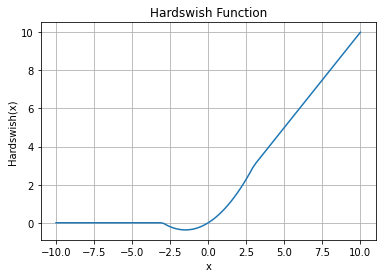

HSwish Activation Function Graph:

- Parameters

x (Tensor) – The input Tensor to which Hard Swish is applied.

- Returns

Tensor, has the same data type and shape as the input.
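To check values outside MindSpore, the formula can be evaluated with NumPy. The sketch below is an illustration only: `hswish_reference` is a hypothetical helper (not a MindSpore API), it assumes floating-point input, and it mirrors the documented contract that the output has the same shape and data type as the input. Float16 results may differ from `ops.hardswish` in the last printed digit, since rounding depends on the order of operations.

```python
import numpy as np


def hswish_reference(x):
    """Hypothetical NumPy sketch of hswish(x) = x * ReLU6(x + 3) / 6 (not a MindSpore API)."""
    x = np.asarray(x)
    if not np.issubdtype(x.dtype, np.floating):
        # Assumption: this sketch only handles floating-point input.
        raise TypeError("hswish_reference expects a floating-point array")
    relu6 = np.clip(x + 3, 0, 6)  # ReLU6(z) = min(max(z, 0), 6)
    # Element-wise operations keep the shape; the cast keeps the input dtype.
    return (x * relu6 / 6).astype(x.dtype, copy=False)


# A 2-D float32 input: the output keeps shape (2, 3) and dtype float32.
x = np.array([[-4.0, -1.0, 0.0], [1.0, 2.0, 4.0]], dtype=np.float32)
y = hswish_reference(x)
print(y.shape, y.dtype)
print(y)
```

Using `np.clip` for ReLU6 keeps the sketch fully vectorized, so it works on arrays of any shape without Python-level loops.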

- Raises

- Supported Platforms:

Ascend GPU CPU

Examples

```python
>>> import mindspore
>>> import numpy as np
>>> from mindspore import Tensor, ops
>>> x = Tensor(np.array([-1, -2, 0, 2, 1]), mindspore.float16)
>>> output = ops.hardswish(x)
>>> print(output)
[-0.3333 -0.3333 0 1.666 0.6665]
```