mindspore.ops.elu

- mindspore.ops.elu(input_x, alpha=1.0)

Exponential Linear Unit activation function.

Applies the exponential linear unit function element-wise. The activation function is defined as:

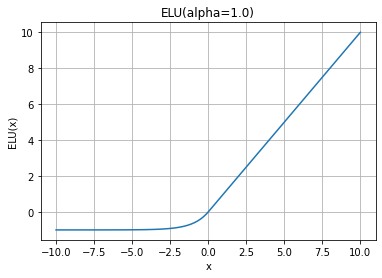

\[\begin{split}\text{ELU}(x)= \left\{ \begin{array}{align} \alpha(e^{x} - 1) & \text{if } x \le 0\\ x & \text{if } x \gt 0\\ \end{array}\right.\end{split}\]Where \(x\) is the element of input Tensor input_x, \(\alpha\) is param alpha, it determines the smoothness of ELU. The picture about ELU looks like this ELU .

ELU function graph:
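As a cross-check of the piecewise definition, the sketch below evaluates the formula in plain Python on the same input values used in the Examples section. It is an illustrative reference only, not MindSpore's kernel. The helper names `elu_reference` and `elu_reference_2d` are hypothetical, and the sketch uses `math.expm1` to compute \(e^{x} - 1\) accurately for inputs near zero.

```python
import math


def elu_reference(x, alpha=1.0):
    """Hypothetical scalar reference of ELU (illustration only, not MindSpore code)."""
    # Zero and negative inputs: alpha * (e^x - 1); expm1 keeps precision near 0.
    if x <= 0:
        return alpha * math.expm1(x)
    # Positive inputs pass through unchanged.
    return x


def elu_reference_2d(rows, alpha=1.0):
    """Apply elu_reference element-wise to a nested list, preserving its shape."""
    return [[elu_reference(v, alpha) for v in row] for row in rows]


if __name__ == "__main__":
    # Same values as the Tensor in the Examples section.
    x = [[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]
    for row in elu_reference_2d(x):
        print([round(v, 8) for v in row])
```

The printed values agree with the float32 output in the Examples section up to the last digit; small last-digit differences are expected because Python floats are float64.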

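- Parameters

input_x (Tensor) – The input of ELU, a Tensor of any dimension with data type float16 or float32.

alpha (float) – The alpha value of ELU; it must be a float, and only 1.0 is currently supported. Default: 1.0.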

- Returns

Tensor, has the same shape and data type as input_x.

- Raises

TypeError – If alpha is not a float.

TypeError – If dtype of input_x is neither float16 nor float32.

ValueError – If alpha is not equal to 1.0.
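The three error conditions above can be summarized as a small argument check. The sketch below is a hypothetical illustration written for this page, not MindSpore's validation code: the function name `check_elu_args` is made up, the dtype is passed as a plain string such as "float32" rather than a MindSpore dtype object, and the order in which the checks run is an assumption.

```python
def check_elu_args(dtype_name, alpha):
    """Hypothetical check mirroring the documented Raises list (illustration only)."""
    # TypeError: in this sketch alpha must be a Python float (int 1 is rejected).
    if not isinstance(alpha, float):
        raise TypeError(f"alpha must be a float, got {type(alpha).__name__}")
    # TypeError: only float16 and float32 inputs are accepted.
    if dtype_name not in ("float16", "float32"):
        raise TypeError(f"input_x dtype must be float16 or float32, got {dtype_name}")
    # ValueError: the only supported alpha value is 1.0.
    if alpha != 1.0:
        raise ValueError(f"alpha must be 1.0, got {alpha}")
```

For example, `check_elu_args("float32", 1.0)` returns without error, while `check_elu_args("float32", 0.5)` raises ValueError.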

- Supported Platforms:

Ascend GPU CPU

Examples

```python
>>> import mindspore
>>> import numpy as np
>>> from mindspore import Tensor, ops
>>> x = Tensor(np.array([[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]), mindspore.float32)
>>> output = ops.elu(x)
>>> print(output)
[[-0.63212055  4.         -0.99966455]
 [ 2.         -0.99326205  9.        ]]
```