mindspore.nn.PReLU

- class mindspore.nn.PReLU(channel=1, w=0.25)

PReLU activation function.

Applies the PReLU function element-wise.

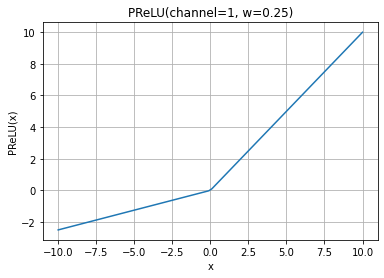

PReLU is defined as:

\[PReLU(x_i)= \max(0, x_i) + w * \min(0, x_i),\]where \(x_i\) is an element of an channel of the input.

Here \(w\) is a learnable parameter with a default initial value 0.25. Parameter \(w\) has dimensionality of the argument channel. If called without argument channel, a single parameter \(w\) will be shared across all channels.

PReLU Activation Function Graph:
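The definition above can be checked against a small NumPy reference. This is only a sketch, not MindSpore's implementation: `prelu_reference` is a hypothetical helper, and it assumes that the channel axis is axis 1 of the input when \(w\) has more than one element.

```python
import numpy as np


def prelu_reference(x, w=0.25):
    """Compute max(0, x) + w * min(0, x) element-wise.

    Hypothetical helper for illustration only; not part of MindSpore.
    Assumption: when w has more than one element, channels lie on axis 1 of x.
    """
    x = np.asarray(x, dtype=np.float32)
    w = np.asarray(w, dtype=np.float32).reshape(-1)
    if w.size > 1:
        if x.ndim < 2 or x.shape[1] != w.size:
            raise ValueError("w must have one element per channel (axis 1) of x")
        # Reshape w to (1, C, 1, ..., 1) so it broadcasts along the channel axis.
        w = w.reshape((1, -1) + (1,) * (x.ndim - 2))
    return np.maximum(0, x) + w * np.minimum(0, x)


# Input of shape (1, 2, 2): N=1, C=2, with some negative entries.
x = np.array([[[-1.0, 2.0], [-3.0, 4.0]]])

# A single shared w scales every negative element by 0.25.
shared = prelu_reference(x)

# One w per channel: channel 0 uses 0.1, channel 1 uses 0.5.
per_channel = prelu_reference(x, w=[0.1, 0.5])
```

With the shared default, the negative entries -1.0 and -3.0 become -0.25 and -0.75. With per-channel weights they become -0.1 and -1.5. Positive entries pass through unchanged in both cases.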

- Parameters

channel (int) – The number of elements of parameter \(w\). It must be an int whose value is either 1 or the number of channels of the input tensor x. Default: 1.

w (Union[float, list, Tensor]) – The initial value of the parameter. It can be a float, a list of floats, or a Tensor with the same dtype as the input tensor x. Default: 0.25.

- Inputs:

x (Tensor) - The input of PReLU, with data type float16 or float32. The shape is \((N, *)\), where \(*\) means any number of additional dimensions.

- Outputs:

Tensor, with the same dtype and shape as x.

- Raises

TypeError – If channel is not an int.

TypeError – If w is not a float, a list of floats, or a float Tensor.

TypeError – If dtype of x is neither float16 nor float32.

ValueError – If x is a 0-D or 1-D Tensor on Ascend.

ValueError – If channel is less than 1.

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> from mindspore import Tensor, nn
    >>> import numpy as np
    >>> x = Tensor(np.array([[[[0.1, 0.6], [0.9, 0.9]]]]), mindspore.float32)
    >>> prelu = nn.PReLU()
    >>> output = prelu(x)
    >>> print(output)
    [[[[0.1 0.6]
       [0.9 0.9]]]]