mindspore.mint.nn.functional.prelu

- mindspore.mint.nn.functional.prelu(input, weight)

Parametric Rectified Linear Unit activation function.

PReLU is described in the paper "Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification". It is defined as follows:

\[prelu(x_i) = \max(0, x_i) + w * \min(0, x_i),\]

where \(x_i\) is an element of a channel of the input and \(w\) is the weight of that channel.

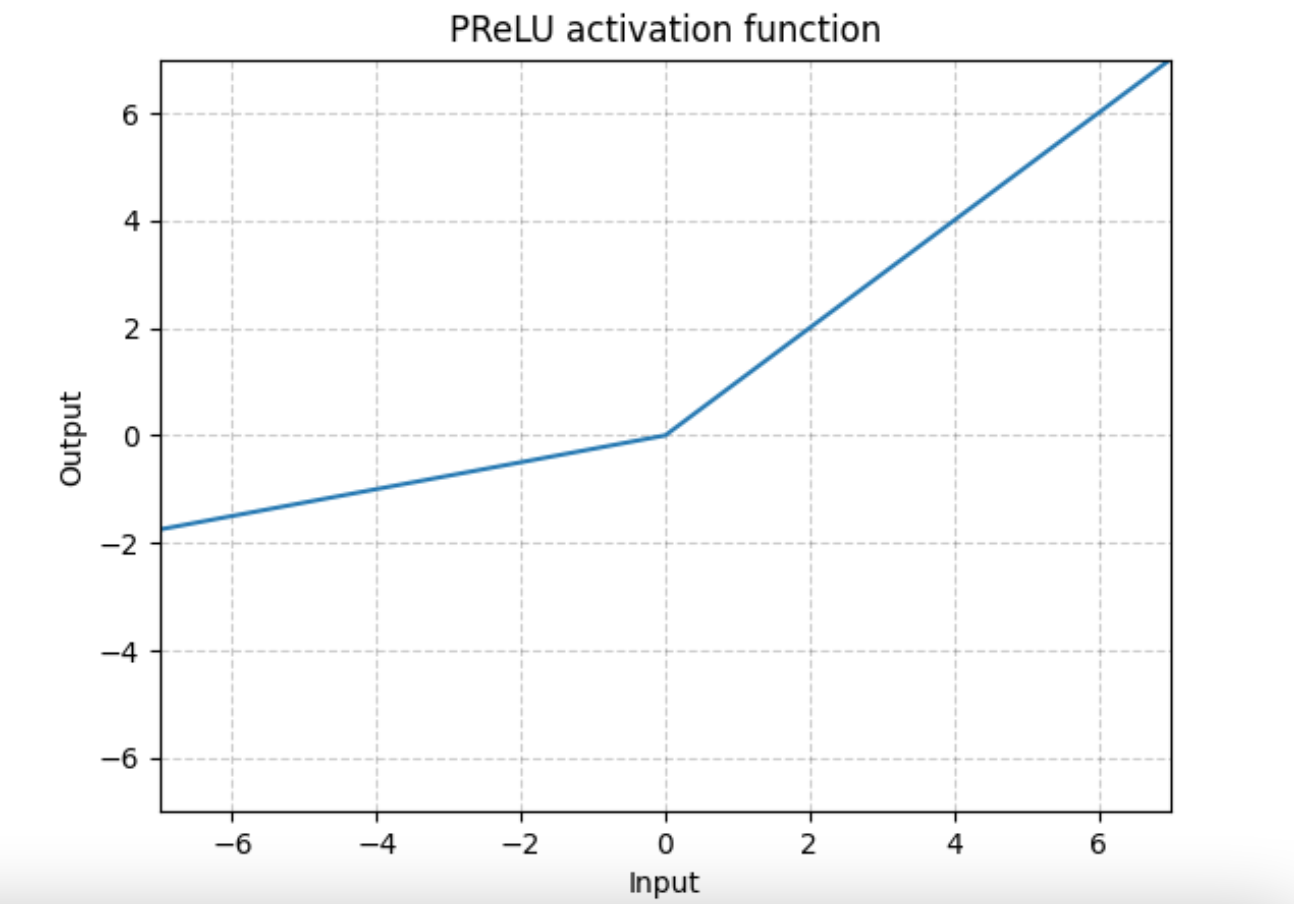

PReLU Activation Function Graph:

Note

The channel dimension is the 2nd dimension of input. When input has fewer than 2 dimensions, there is no channel dimension and the number of channels is 1.
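As a rough illustration of the definition and the channel rule in the note, the following NumPy sketch mirrors the computation outside MindSpore. `prelu_reference` is a hypothetical helper written for this example, not part of the MindSpore API. It assumes the weight holds either a single element or one element per channel, and it scales the negative part as `w * min(0, x)`, which reproduces the example output on this page, including the channel with a negative weight.

```python
import numpy as np

def prelu_reference(x, weight):
    """Hypothetical NumPy reference for PReLU; not a MindSpore function."""
    x = np.asarray(x, dtype=np.float32)
    w = np.asarray(weight, dtype=np.float32).reshape(-1)
    if x.ndim >= 2 and w.size > 1:
        # The channel dimension is the 2nd one, so reshape w to
        # (1, C, 1, ..., 1) and let broadcasting apply one weight per channel.
        w = w.reshape((1, -1) + (1,) * (x.ndim - 2))
    # Positive part passes through; negative part is scaled by the weight.
    return np.maximum(0, x) + w * np.minimum(0, x)

# Same input and weight as the example on this page: shape (2, 3, 2), 3 channels.
x = np.arange(-6, 6).reshape((2, 3, 2))
out = prelu_reference(x, [0.1, 0.6, -0.3])
print(out.shape)
```

For an input with fewer than 2 dimensions there is no channel dimension, so the sketch relies on a single-element weight broadcasting over every element.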

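- Parameters

input (Tensor) – The input Tensor of the activation function.

weight (Tensor) – The weight Tensor. It must be a 0-D or 1-D Tensor.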

- Returns

Tensor, with the same shape and dtype as input. For detailed information, please refer to mindspore.mint.nn.PReLU.

- Raises

TypeError – If the input or the weight is not a Tensor.

ValueError – If the weight is not a 0-D or 1-D Tensor.
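The two error conditions above can be sketched as plain argument checks. This is only an illustration under stated assumptions: `check_prelu_args` is a hypothetical helper, NumPy arrays stand in for Tensors, and the real MindSpore checks and error messages may differ.

```python
import numpy as np

def check_prelu_args(x, weight):
    """Hypothetical checks mirroring the documented Raises section.

    NumPy arrays are used as stand-ins for Tensors; this is not MindSpore code.
    """
    if not isinstance(x, np.ndarray) or not isinstance(weight, np.ndarray):
        # Corresponds to: TypeError if the input or the weight is not a Tensor.
        raise TypeError("input and weight must both be arrays")
    if weight.ndim not in (0, 1):
        # Corresponds to: ValueError if the weight is not 0-D or 1-D.
        raise ValueError("weight must be a 0-D or 1-D array")

# A 1-D weight with one entry per channel passes both checks.
check_prelu_args(np.zeros((2, 3, 2)), np.array([0.1, 0.6, -0.3]))
```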

- Supported Platforms:

Ascend

Examples

>>> import mindspore
>>> import numpy as np
>>> from mindspore import Tensor, mint
>>> x = Tensor(np.arange(-6, 6).reshape((2, 3, 2)), mindspore.float32)
>>> weight = Tensor(np.array([0.1, 0.6, -0.3]), mindspore.float32)
>>> output = mint.nn.functional.prelu(x, weight)
>>> print(output)
[[[-0.60 -0.50]
  [-2.40 -1.80]
  [ 0.60  0.30]]
 [[ 0.00  1.00]
  [ 2.00  3.00]
  [ 4.0   5.00]]]