mindspore.ops.hardtanh

- mindspore.ops.hardtanh(input, min_val=-1.0, max_val=1.0)

Applies the hardtanh activation function element-wise. The activation function is defined as:

\[\text{hardtanh}(input) = \begin{cases} max\_val, & \text{if } input > max\_val \\ min\_val, & \text{if } input < min\_val \\ input, & \text{otherwise.} \end{cases}\]

The linear region range \([min\_val, max\_val]\) can be adjusted using min_val and max_val.
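The piecewise definition above can be sketched in plain NumPy for reference. This is an illustrative re-implementation only, not MindSpore's code: the helper name `hardtanh_reference` is hypothetical, and it assumes `min_val <= max_val`.

```python
import numpy as np


def hardtanh_reference(x, min_val=-1.0, max_val=1.0):
    """NumPy sketch of the hardtanh definition (hypothetical helper, not a MindSpore API)."""
    x = np.asarray(x)
    # Elements above max_val become max_val, elements below min_val become min_val,
    # and elements inside [min_val, max_val] pass through unchanged.
    out = np.where(x > max_val, max_val, np.where(x < min_val, min_val, x))
    # Cast back so the result keeps the input dtype.
    return out.astype(x.dtype)


x = np.array([-1, -2, 0, 2, 1], dtype=np.float16)
default_out = hardtanh_reference(x)             # default linear region [-1, 1]
narrow_out = hardtanh_reference(x, -0.5, 0.5)   # narrower linear region [-0.5, 0.5]
print(default_out)
print(narrow_out)
```

For `min_val <= max_val` this is equivalent to `np.clip(x, min_val, max_val)`; the nested `np.where` form is used here only because it mirrors the three cases of the formula one to one.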

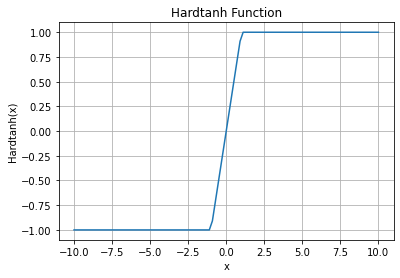

Hardtanh Activation Function Graph:

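- Parameters

  - input (Tensor) - Input tensor.
  - min_val (optional) - Lower bound of the linear region. Default: -1.0.
  - max_val (optional) - Upper bound of the linear region. Default: 1.0.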

- Returns

Tensor, with the same dtype and shape as input.

- Raises

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> from mindspore import Tensor, ops
    >>> x = Tensor([-1, -2, 0, 2, 1], mindspore.float16)
    >>> output = ops.hardtanh(x, min_val=-1.0, max_val=1.0)
    >>> print(output)
    [-1. -1.  0.  1.  1.]