mindspore.ops.hardsigmoid

- mindspore.ops.hardsigmoid(input)

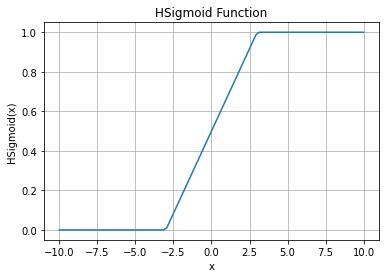

Hard sigmoid activation function.

Applies hard sigmoid activation element-wise. The input is a Tensor with any valid shape.

Hard sigmoid is defined as:

\[\text{hsigmoid}(x_{i}) = \max\left(0, \min\left(1, \frac{x_{i} + 3}{6}\right)\right)\]

where \(x_i\) is an element of the input Tensor.
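As a rough illustration of the formula, the following pure-Python sketch evaluates it one scalar at a time. The helper name `hardsigmoid_reference` is hypothetical and is not part of MindSpore. It only mirrors the definition above and says nothing about how the operator is implemented internally.

```python
def hardsigmoid_reference(x: float) -> float:
    """Hypothetical scalar reference for max(0, min(1, (x + 3) / 6))."""
    # Shift and scale so that the interval [-3, 3] maps onto [0, 1],
    # then clamp everything outside that interval to 0 or 1.
    return max(0.0, min(1.0, (x + 3.0) / 6.0))


# Inputs at and beyond the saturation points -3 and 3, plus the midpoint 0.
samples = [-3.5, -3.0, 0.0, 3.0, 4.3]
outputs = [hardsigmoid_reference(v) for v in samples]
print(outputs)  # [0.0, 0.0, 0.5, 1.0, 1.0]
```

Inputs at or below -3 saturate to 0, inputs at or above 3 saturate to 1, and values in between are mapped linearly with slope 1/6.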

HSigmoid Activation Function Graph:

- Parameters

input (Tensor) – The input Tensor.

- Returns

A Tensor whose dtype and shape are the same as input.

- Raises

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> import numpy as np
    >>> from mindspore import Tensor, ops
    >>> x = Tensor(np.array([-3.5, 0, 4.3]), mindspore.float32)
    >>> output = ops.hardsigmoid(x)
    >>> print(output)
    [0.  0.5 1. ]