Performance Profiling (Ascend-PyNative)

Overview

This article describes how to use MindSpore Profiler for performance debugging on Ascend AI processors. PyNative mode currently supports three functions: Operator Performance Analysis, Timeline Analysis, and Data Preparation Performance Analysis.

Operation Process

Prepare a training script, add profiler APIs in the training script and run the training script.

Start MindInsight and specify the summary-base-dir using startup parameters. Note that summary-base-dir is the parent directory of the directory created by Profiler. For example, if the directory created by Profiler is /home/user/code/data/, the summary-base-dir should be /home/user/code. After MindInsight is started, access the visualization page based on the IP address and port number. The default access address is http://127.0.0.1:8080.

Find the training in the list, click the performance profiling link and view the data on the web page.
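The parent-directory rule above can be sketched in a few lines of Python. The helper name summary_base_dir is hypothetical and only illustrates the path relationship. The argument list assumes MindInsight is launched with mindinsight start and its --summary-base-dir and --port parameters, so check it against MindInsight Commands for your version.

```python
from pathlib import PurePosixPath


def summary_base_dir(profiler_dir: str) -> str:
    """Return the parent of the directory created by Profiler (hypothetical helper)."""
    return str(PurePosixPath(profiler_dir).parent)


# Directory created by Profiler, taken from the example in the text.
base_dir = summary_base_dir("/home/user/code/data/")

# Assumed launch arguments; the port matches the default address 127.0.0.1:8080.
launch_cmd = ["mindinsight", "start", "--summary-base-dir", base_dir, "--port", "8080"]
print(" ".join(launch_cmd))
```

The command is only printed here, not executed, so the sketch can be run without MindInsight installed.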

Preparing the Training Script

To enable the performance profiling of neural networks, MindSpore Profiler APIs should be added into the script.

Before training starts, the MindSpore Profiler object needs to be initialized.

Note

The parameters of Profiler are as follows: https://www.mindspore.cn/docs/en/r1.8/api_python/mindspore/mindspore.Profiler.html#mindspore.Profiler

At the end of the training, Profiler.analyse() should be called to finish profiling and generate the performance analysis results.

The code for a normal scenario is as follows:

    import numpy as np
    import mindspore as ms
    from mindspore import nn
    import mindspore.dataset as ds


    class Net(nn.Cell):
        def __init__(self):
            super(Net, self).__init__()
            self.fc = nn.Dense(2, 2)

        def construct(self, x):
            return self.fc(x)


    def generator():
        for i in range(2):
            yield (np.ones([2, 2]).astype(np.float32), np.ones([2]).astype(np.int32))


    def train(net):
        optimizer = nn.Momentum(net.trainable_params(), 1, 0.9)
        loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True)
        data = ds.GeneratorDataset(generator, ["data", "label"])
        model = ms.Model(net, loss, optimizer)
        model.train(1, data)


    if __name__ == '__main__':
        ms.set_context(mode=ms.PYNATIVE_MODE, device_target="Ascend")

        # Init Profiler
        # Note that the Profiler should be initialized before model.train
        profiler = ms.Profiler(output_path='./profiler_data')

        # Train Model
        net = Net()
        train(net)

        # Profiler end
        profiler.analyse()

Launch MindInsight

For the MindInsight launch command, refer to MindInsight Commands.

Training Performance

Users can access the Training Performance page by selecting a specific training from the training list and clicking the performance profiling link.

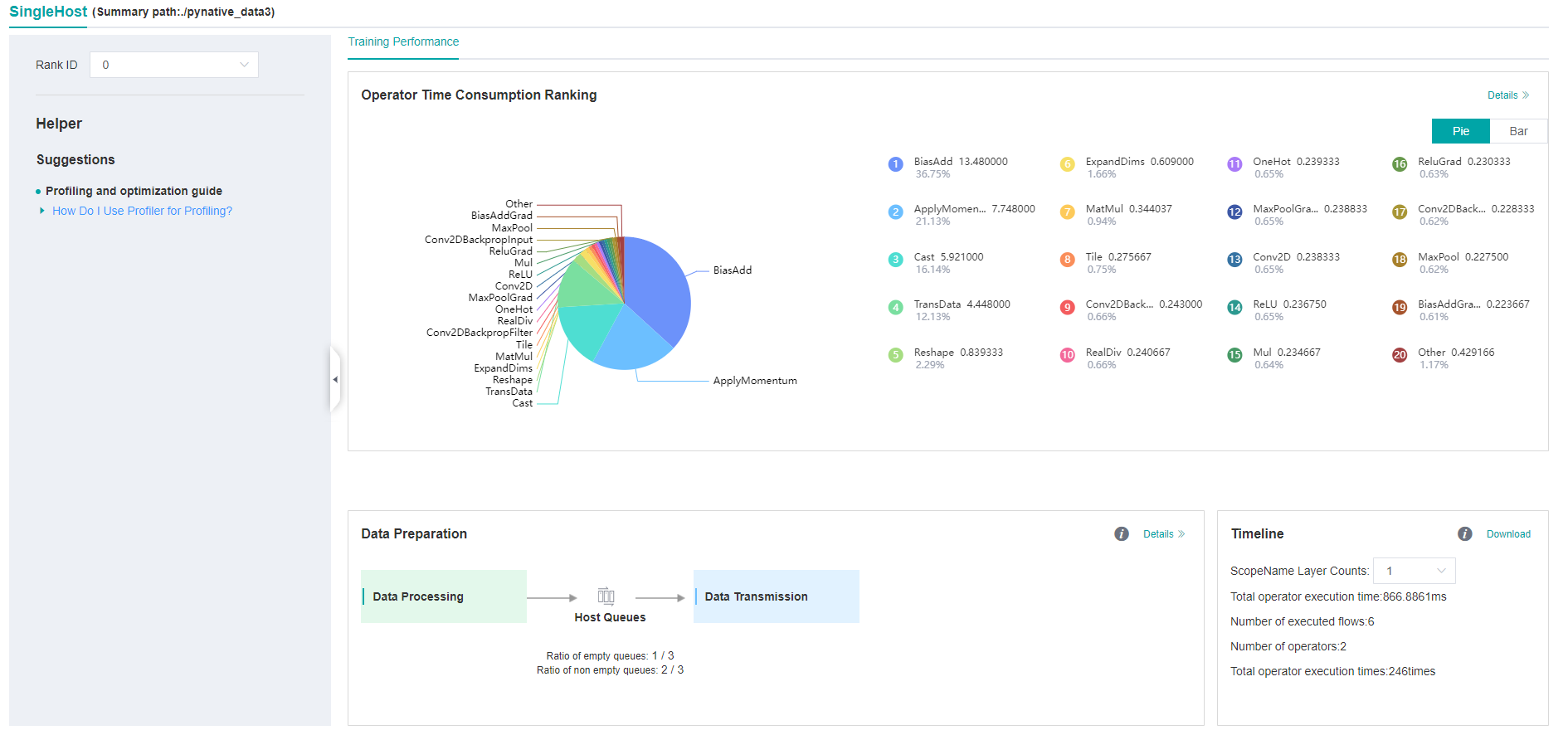

Figure: Overall Performance

The figure above displays the overall performance of the training, including the Operator Performance, Data Preparation Performance and Timeline. The data shown in these components includes:

Operator Performance: It will collect the execution time of operators and operator types. The overall performance page will show the pie graph for different operator types.

Data Preparation Performance: It will analyse the performance of the data input stages. The overall performance page will show the number of steps that may be the bottleneck for these stages.

Timeline: It will collect execution time for stream tasks on the devices. The tasks will be shown on the time axis. The overall performance page will show the statistics for streams and tasks.

Users can click the detail link to see the details of each component. In addition, MindInsight Profiler will try to analyse the performance data, and the assistant on the left will show performance tuning suggestions for this training.

Operator Performance Analysis

The operator performance analysis component displays the execution time of the operators (ASCEND/HOSTCPU) during a MindSpore run.

ASCEND: The operator executed on Ascend. In PyNative mode, Ascend operators are not distinguished between AICPU and AICore.

HOSTCPU: The host-side CPU is mainly responsible for distributing the graph or operator to the Ascend chip, and operators can also be developed on the host-side CPU according to actual needs. The HOSTCPU operator refers to an operator running on the host-side CPU.

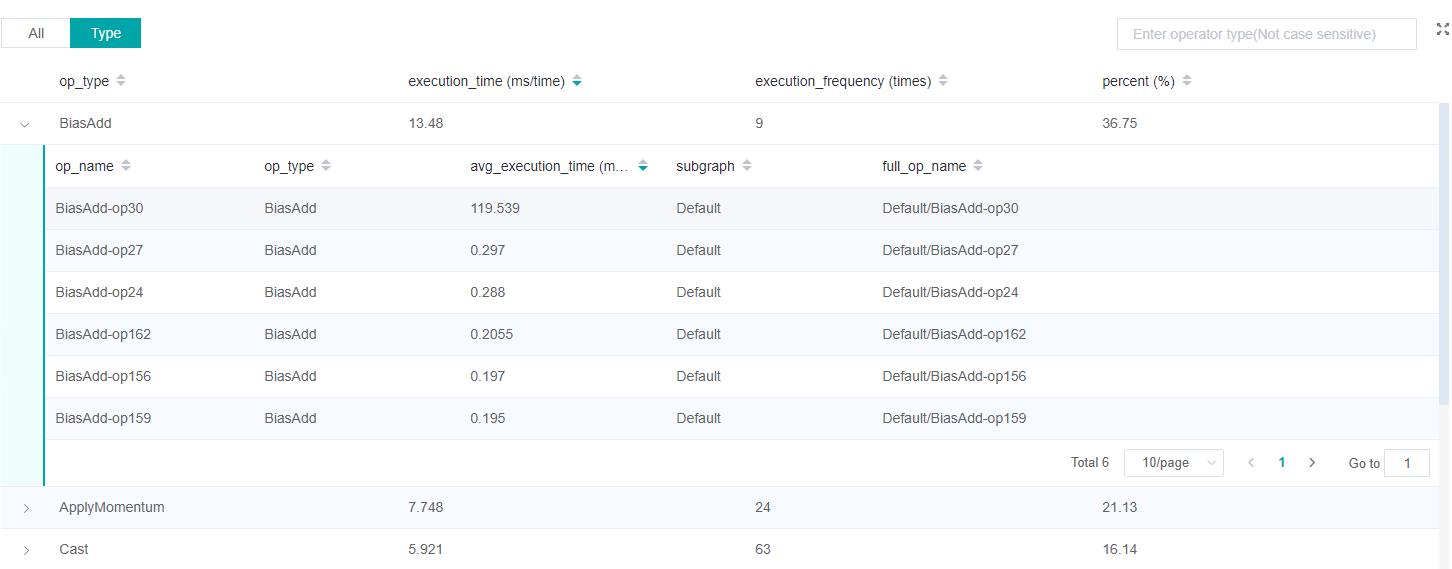

Figure: Statistics for Operator Types

The figure above displays the statistics for the operator types, including:

Choose a pie or bar graph to show the proportion of time occupied by each operator type. The time of one operator type is calculated by accumulating the execution time of the operators belonging to this type.

Display the top 20 operator types with the longest execution time, and show the proportion and execution time (ms) of each operator type.
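The per-type accumulation described above can be illustrated with a small sketch. It does not read Profiler output: the record layout (operator name, operator type, time in ms) and the function name summarize_by_type are assumptions made for illustration, and the sample records are made up.

```python
from collections import defaultdict


def summarize_by_type(op_records, top_n=20):
    """Accumulate execution time per operator type and rank the types.

    op_records: iterable of (operator_name, operator_type, time_ms) tuples.
    Returns (operator_type, total_ms, proportion) tuples, longest first.
    """
    totals = defaultdict(float)
    for _name, op_type, time_ms in op_records:
        totals[op_type] += time_ms
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [
        (op_type, total_ms, total_ms / grand_total if grand_total else 0.0)
        for op_type, total_ms in ranked[:top_n]
    ]


# Made-up sample records, for illustration only.
records = [
    ("Default/MatMul-op1", "MatMul", 3.0),
    ("Default/MatMul-op2", "MatMul", 1.0),
    ("Default/BiasAdd-op3", "BiasAdd", 1.0),
]
for op_type, total_ms, share in summarize_by_type(records):
    print(f"{op_type}: {total_ms:.1f} ms ({share:.0%})")
```

Here the two MatMul operators are summed into one entry before the proportion is computed, which mirrors how the pie and bar graphs group operators by type.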

Figure: Statistics for Operators

The figure above displays the statistics table for the operators, including:

Choose All: Display statistics for the operators, including operator name, type, execution time, subgraph, and full name. The table is sorted by execution time by default.

Choose Type: Display statistics for the operator types, including operator type name, execution time, execution frequency and proportion of total time. Users can click on each line to query all the operators belonging to this type.

Search: The search box on the right supports fuzzy search for operators and operator types.

Data Preparation Performance Analysis

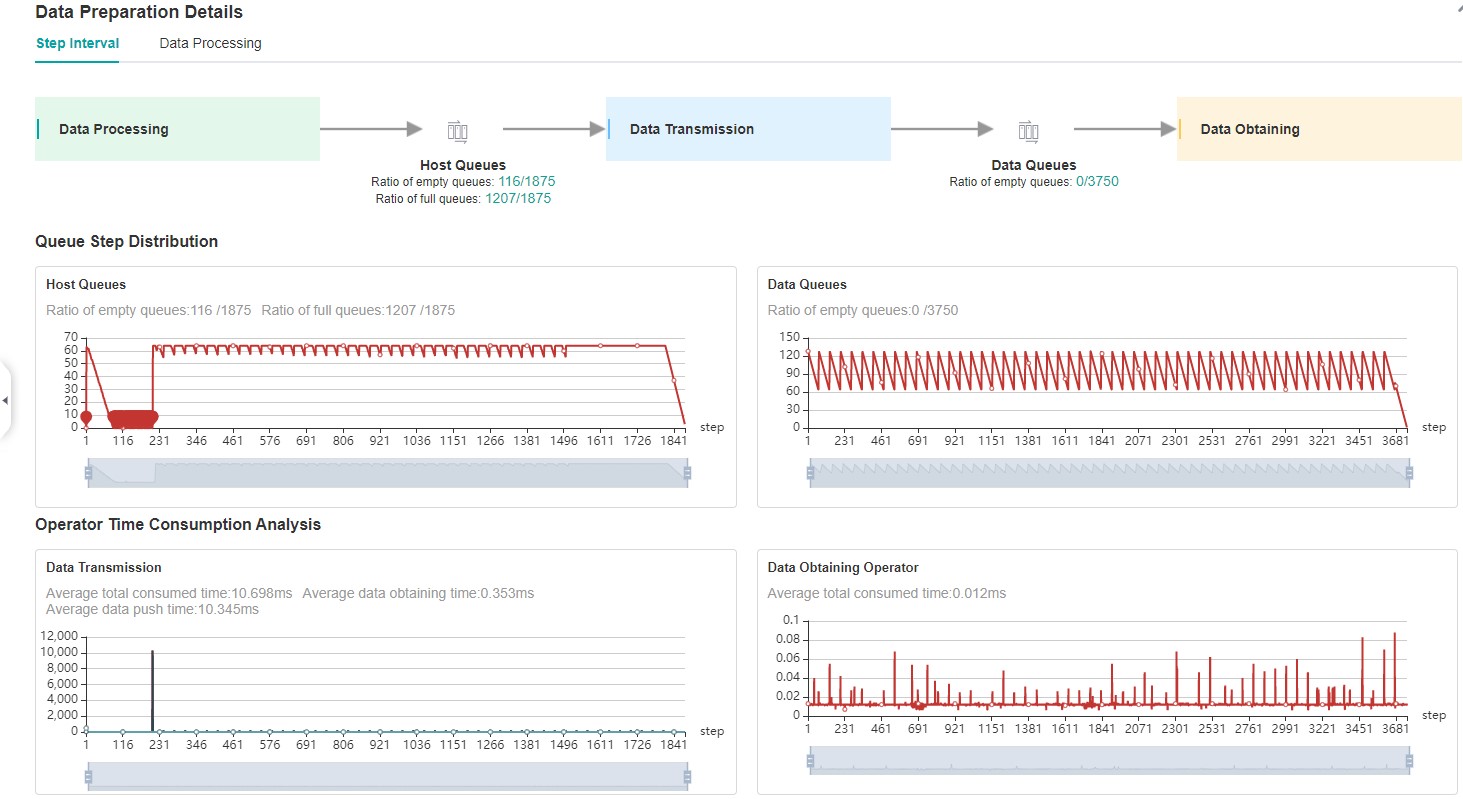

Figure: Data Preparation Performance Analysis

The figure above displays the page of the data preparation performance analysis component. It consists of two tabs: the step gap and the data process.

The step gap page is used to analyse whether there is a performance bottleneck in the three stages. Conclusions can be drawn from the data queue graphs:

The data queue size stands for the queue length when the training fetches data from the queue on the device. If the data queue size is 0, the training will wait until there is data in the queue; if the data queue size is greater than 0, the training can get data very quickly, which means the data preparation stage is not the bottleneck for this training step.

The host queue size can be used to infer the speed of data processing and data transfer. If the host queue size is 0, the data processing stage needs to be sped up.

If the size of the host queue is always large and the size of the data queue is continuously small, there may be a performance bottleneck in data transfer.
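The three rules above can be written down as a rough heuristic. This is a sketch under stated assumptions, not the logic MindInsight uses: the function name, the input format (one sampled queue size per step plus each queue's capacity), and the 0.8 and 0.2 thresholds for "large" and "small" are all made up for illustration.

```python
def diagnose_step_gap(data_queue, host_queue, data_capacity, host_capacity,
                      high=0.8, low=0.2):
    """Apply the step-gap rules to sampled queue sizes (illustrative thresholds)."""
    findings = []

    # Rule 1: a data queue size of 0 means the training had to wait for data.
    empty_steps = sum(1 for size in data_queue if size == 0)
    if empty_steps:
        findings.append(
            f"training waited for data in {empty_steps} of {len(data_queue)} steps"
        )

    # Rule 2: a host queue size of 0 points at the data processing stage.
    if any(size == 0 for size in host_queue):
        findings.append("host queue ran empty: speed up data processing")

    # Rule 3: host queue always large while data queue stays small.
    host_always_large = all(size >= high * host_capacity for size in host_queue)
    data_always_small = all(size <= low * data_capacity for size in data_queue)
    if data_queue and host_queue and host_always_large and data_always_small:
        findings.append("possible bottleneck in data transfer")

    return findings or ["data preparation is not the bottleneck"]


# Made-up samples: the host queue is nearly full, the device queue is starved.
for line in diagnose_step_gap([0, 0, 1], [16, 15, 16], 16, 16):
    print(line)
```

Because host queue and data queue values are recorded asynchronously, the two input lists may have different lengths; the sketch treats them independently for that reason.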

Note

The queue size is the value recorded when fetching data, and obtaining the data of host queue and data queue is executed asynchronously, so the number of host queue steps, data queue steps, and user training steps may be different.

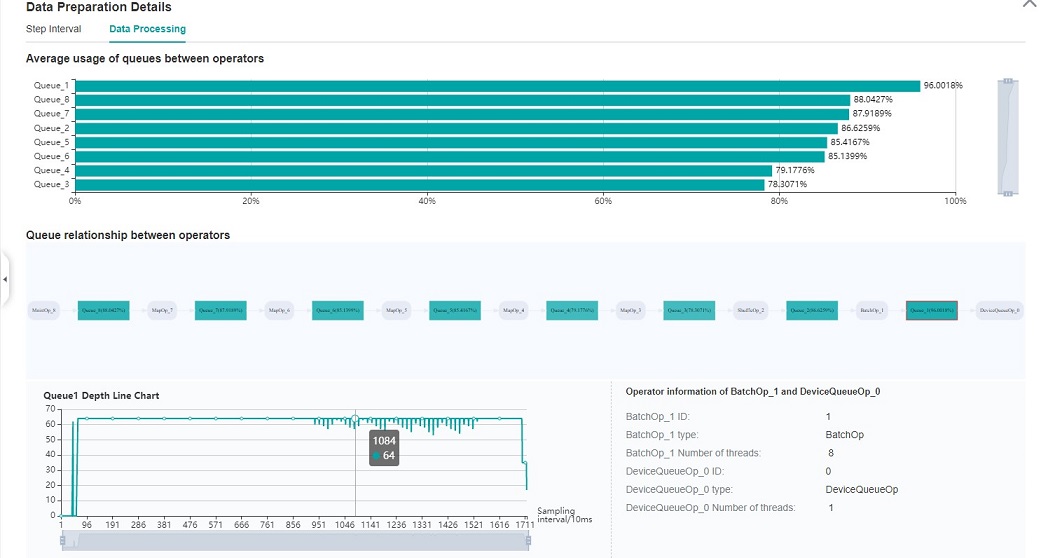

Figure: Data Process Pipeline Analysis

The figure above displays the page of data process pipeline analysis. The data queues are used to exchange data between the data processing operators. The data size of the queues reflects the data consumption speed of the operators, and can be used to infer the bottleneck operator. The queue usage percentage is the average data size in the queue divided by the maximum size of the queue; the higher the usage percentage, the more data is accumulated in the queue. The graph at the bottom of the page shows the data processing pipeline operators with the data queues. Users can click a queue to see how its data size changes over time, and which operators are connected to the queue. The data process pipeline can be analysed as follows:

When the input queue usage percentage of one operator is high, and the output queue usage percentage is low, the operator may be the bottleneck.

For the leftmost operator, if the usage percentages of all the queues on the right are low, the operator may be the bottleneck.

For the rightmost operator, if the usage percentages of all the queues on the left are high, the operator may be the bottleneck.
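The queue usage percentage and the three bottleneck rules can be sketched as follows. The sketch assumes a simple linear pipeline in which queue i sits between operator i and operator i + 1; real pipelines may branch. The function names and the 0.8 and 0.2 thresholds for "high" and "low" are illustrative assumptions.

```python
def queue_usage(sizes, max_size):
    """Average data size in the queue divided by the queue's maximum size."""
    return sum(sizes) / (len(sizes) * max_size)


def find_bottlenecks(operators, usages, high=0.8, low=0.2):
    """Return operators that match one of the three rules (linear pipeline only).

    operators: operator names from left to right.
    usages: usage of the queue between operators[i] and operators[i + 1].
    """
    if len(operators) < 2 or len(usages) != len(operators) - 1:
        raise ValueError("expected exactly one queue between each operator pair")

    suspects = []
    last = len(operators) - 1
    for i, name in enumerate(operators):
        if i == 0:
            # Leftmost operator: every queue to its right is nearly empty.
            hit = all(u <= low for u in usages)
        elif i == last:
            # Rightmost operator: every queue to its left is nearly full.
            hit = all(u >= high for u in usages)
        else:
            # Middle operator: input queue full, output queue nearly empty.
            hit = usages[i - 1] >= high and usages[i] <= low
        if hit:
            suspects.append(name)
    return suspects


# Made-up usage values for a three-operator pipeline.
print(queue_usage([4, 8, 12], 16))
print(find_bottlenecks(["GeneratorOp", "MapOp", "BatchOp"], [0.9, 0.1]))
```

In the sample, data piles up in front of MapOp and drains quickly behind it, so MapOp is the operator flagged for a closer look.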

To optimize the performance of data processing operators, there are some suggestions:

If the Dataset Operator is the bottleneck, try to increase num_parallel_workers.

If a GeneratorOp type operator is the bottleneck, try to increase num_parallel_workers and replace the operator with MindRecordDataset.

If a MapOp type operator is the bottleneck, try to increase num_parallel_workers. If it is a Python operator, try to optimize the training script.

If a BatchOp type operator is the bottleneck, try to adjust the size of prefetch_size.

Timeline Analysis

The Timeline component can display:

Which device each operator (ASCEND/HOSTCPU) is executed on.

The MindSpore thread split strategy for this neural network.

The execution sequence and execution time of the operator on the device.

The step number of training (only supported in dataset sinking mode).

The Scope Name of the operator. The number of Scope Names shown for each operator can be selected, and the corresponding timeline file can be downloaded. For example, if the full name of one operator is Default/network/lenet5/Conv2D-op11, the first Scope Name of this operator is Default and the second Scope Name is network. If two Scope Names are selected for each operator, then Default and network will be displayed.
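The Scope Name selection can be illustrated by splitting the full operator name on the slash character. The function scope_names is a hypothetical helper written for this example; it is not part of MindSpore or MindInsight.

```python
def scope_names(full_name: str, count: int):
    """Return the first `count` Scope Names of an operator's full name."""
    # Everything before the last "/" is scope; the last part is the operator.
    scopes = full_name.split("/")[:-1]
    return scopes[:count]


print(scope_names("Default/network/lenet5/Conv2D-op11", 2))  # ['Default', 'network']
```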

By analyzing the timeline, users can locate an operator and view and analyze its execution time.

Click the download button on the overall performance page to view Timeline details. The Timeline data file (JSON format) will be stored on the local machine and can be displayed by tools. We suggest using chrome://tracing or Perfetto to visualize the Timeline.

Chrome tracing: Click “load” on the upper left to load the file.

Perfetto: Click “Open trace file” on the left to load the file.
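Besides the viewers above, the downloaded file can also be inspected with a short script. The sketch below assumes the file follows the Trace Event Format that chrome://tracing and Perfetto read, with complete events marked by "ph": "X" and carrying "name" and "dur" fields; the exact fields in a given Timeline file may differ, so treat the field names as assumptions. The sample events are made up and stand in for the contents of a downloaded file.

```python
import json
from collections import Counter


def load_events(parsed):
    """Accept either a bare event list or an object with a traceEvents list."""
    if isinstance(parsed, dict):
        return parsed.get("traceEvents", [])
    return parsed


def total_duration_by_name(events):
    """Sum the duration of complete ("X") events per name, longest first."""
    totals = Counter()
    for event in events:
        if event.get("ph") == "X" and "dur" in event:
            totals[event["name"]] += float(event["dur"])
    return totals.most_common()


# Made-up events; with a real file, use json.load(open(path)) instead.
sample = json.loads("""
[
  {"name": "Conv2D-op11", "ph": "X", "pid": 0, "tid": 1, "ts": 100, "dur": 40},
  {"name": "ReLU-op12", "ph": "X", "pid": 0, "tid": 1, "ts": 140, "dur": 10},
  {"name": "Conv2D-op11", "ph": "X", "pid": 0, "tid": 1, "ts": 200, "dur": 35},
  {"name": "process_name", "ph": "M", "pid": 0, "args": {"name": "Ascend Op"}}
]
""")
for name, duration in total_duration_by_name(load_events(sample)):
    print(name, duration)
```

Durations are reported in whatever time unit the file uses; the script does not convert them.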

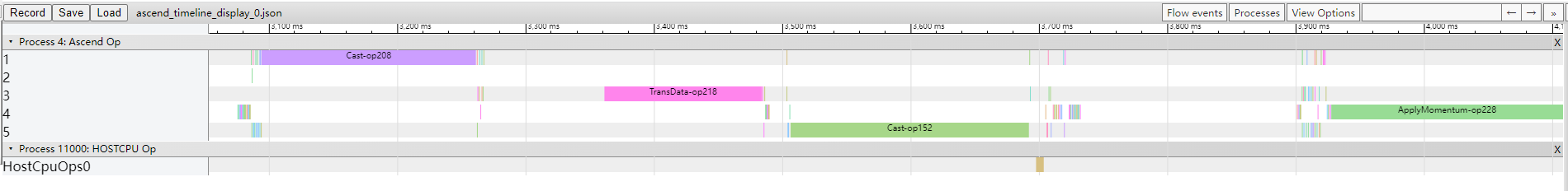

Figure: Timeline Analysis

The Timeline consists of the following parts:

Device and Stream List: It will show the stream list on each device. Each stream consists of a series of tasks. One rectangle stands for one task, and the area stands for the execution time of the task. Each color block represents the starting time and length of operator execution. The detailed explanation of timeline is as follows:

Step: the number of training steps.

Ascend Op: contains the timeline of operators executed on the Ascend.

HOSTCPU Op: contains the timeline of operators executed on the HOSTCPU.

The Operator Information: When we click one task, the corresponding operator of this task will be shown at the bottom.

W/A/S/D can be applied to zoom in and out of the Timeline graph.

Specifications

To limit the size of the data generated by the Profiler, MindInsight suggests that for large neural networks, fewer than 10 steps should be profiled.

Note

The number of steps can be controlled by controlling the size of the training dataset. For example, the num_samples parameter in mindspore.dataset.MindDataset can control the size of the dataset. For details, please refer to: https://www.mindspore.cn/docs/en/r1.8/api_python/dataset/mindspore.dataset.MindDataset.html

Parsing Timeline data is time consuming, and usually the data of a few steps is enough to analyze the results. To speed up data parsing and UI display, Profiler will show at most 20M of data (containing 10+ steps of information for large networks).
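The relationship between dataset size and step count noted above comes down to simple arithmetic. The sketch assumes the training script batches the dataset with a fixed batch size, and that a drop_remainder setting decides whether a final partial batch counts as a step; both function names are hypothetical.

```python
import math


def steps_per_epoch(num_samples, batch_size, drop_remainder=False):
    """Number of training steps one epoch produces for a given dataset size."""
    if drop_remainder:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


def samples_for_steps(target_steps, batch_size):
    """Dataset size (num_samples) that yields exactly target_steps full batches."""
    return target_steps * batch_size


# Keep the profiled run under 10 steps with an assumed batch size of 32.
num_samples = samples_for_steps(8, 32)
print(num_samples, steps_per_epoch(num_samples, 32))
```

The resulting value is what would be passed as num_samples when constructing the dataset for a profiling run.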

Notices

Currently, performance debugging is not supported for a combined training and inference process; only standalone training or standalone inference is supported.

Ascend performance debugging does not support dynamic shape scenarios, multi-subgraph scenarios, or control flow scenarios.