mindspore.nn.LeakyReLU

- class mindspore.nn.LeakyReLU(alpha=0.2)

Leaky ReLU activation function.

The activation function is defined as:

\[\text{leaky\_relu}(x) = \begin{cases}x, &\text{if } x \geq 0; \\ \alpha \cdot x, &\text{otherwise,}\end{cases}\]

where \(\alpha\) represents the alpha parameter.
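The piecewise definition can be illustrated with a minimal pure-Python sketch. This is not MindSpore's implementation: the standalone function `leaky_relu` and its handling of nested lists are assumptions made for illustration only.

```python
def leaky_relu(x, alpha=0.2):
    """Apply the piecewise Leaky ReLU definition to a number or a nested list."""
    if isinstance(x, (list, tuple)):
        # Recurse so that inputs of any nesting depth keep their shape.
        return [leaky_relu(item, alpha) for item in x]
    # Non-negative values pass through unchanged; negative values are scaled by alpha.
    return x if x >= 0 else alpha * x


# Illustrative input values; the default alpha of 0.2 is used.
values = [[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]
result = leaky_relu(values)
print(result)
```

Only the negative entries change, each multiplied by alpha, while the nesting of the input is preserved.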

For more details, see the paper "Rectifier Nonlinearities Improve Neural Network Acoustic Models".

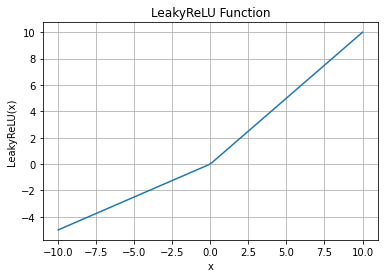

LeakyReLU activation function graph.

- Inputs:

x (Tensor) - The input of LeakyReLU is a Tensor of any dimension.

- Outputs:

Tensor, with the same type and shape as x.

- Raises:

TypeError – If alpha is not a float or an int.
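The documented type requirement on alpha can be sketched as a plain Python check. The helper `check_alpha` and its error message are hypothetical and do not reproduce MindSpore's internal validator; how the library treats edge cases such as bool values is not covered here.

```python
def check_alpha(alpha):
    """Hypothetical helper: reject an alpha that is neither an int nor a float."""
    if not isinstance(alpha, (int, float)):
        raise TypeError(
            f"alpha must be a float or an int, but got {type(alpha).__name__}"
        )
    return alpha


# Numeric values are accepted as-is.
check_alpha(0.2)
check_alpha(1)

# A string is rejected with a TypeError.
message = None
try:
    check_alpha("0.2")
except TypeError as err:
    message = str(err)
    print(message)
```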

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> from mindspore import Tensor, nn
    >>> import numpy as np
    >>> x = Tensor(np.array([[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]), mindspore.float32)
    >>> leaky_relu = nn.LeakyReLU()
    >>> output = leaky_relu(x)
    >>> print(output)
    [[-0.2  4.  -1.6]
     [ 2.  -1.   9. ]]