mindspore.dataset.audio.TimeStretch

- class mindspore.dataset.audio.TimeStretch(hop_length=None, n_freq=201, fixed_rate=None)

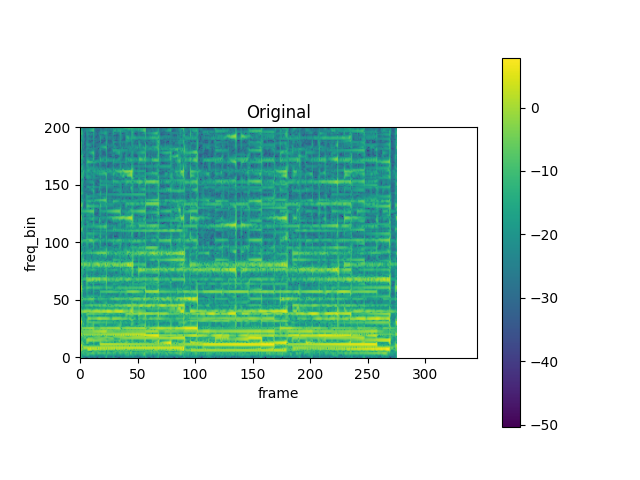

Stretch a Short Time Fourier Transform (STFT) spectrogram in time by a given rate without modifying its pitch.

Note

The input to be processed must be a complex spectrogram of shape <…, freq, time, complex=2>. In the last dimension, the first element holds the real part and the second holds the imaginary part.
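As an illustration of this layout, the sketch below packs a complex-valued spectrogram into a real array with a trailing axis of size 2. It uses only NumPy; the array sizes and the variable names `spec` and `spec_ri` are arbitrary choices for the example, not part of the MindSpore API.

```python
import numpy as np

# Hypothetical complex spectrogram: 201 frequency bins, 40 frames.
rng = np.random.default_rng(0)
spec = rng.standard_normal((201, 40)) + 1j * rng.standard_normal((201, 40))

# Stack real and imaginary parts along a new trailing axis,
# giving the <freq, time, complex=2> layout described in the note.
spec_ri = np.stack([spec.real, spec.imag], axis=-1)
print(spec_ri.shape)

# The complex values can be recovered from the two channels.
restored = spec_ri[..., 0] + 1j * spec_ri[..., 1]
```

An array shaped like `spec_ri` is the kind of input the transform expects; any leading batch or channel dimensions go before `freq`.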

- Parameters

hop_length (int, optional) – Length of hop between STFT windows, i.e. the number of samples between consecutive frames. Default: None, will use n_freq - 1.

n_freq (int, optional) – Number of filter banks from STFT. Default: 201.

fixed_rate (float, optional) – Rate to speed up or slow down by. Default: None, will keep the original rate.
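To get a feel for how the rate affects the output length, the sketch below estimates the number of output frames for a given rate. It assumes the common phase-vocoder convention in which output frames are sampled at steps of `rate` across the input frames, giving `ceil(num_frames / rate)`. This page does not state the formula, so treat it as an assumption and check it against actual output shapes. The helper name `expected_num_frames` is hypothetical.

```python
import math

def expected_num_frames(num_frames: int, rate: float) -> int:
    """Estimate output frame count, assuming ceil(num_frames / rate)."""
    if rate <= 0:
        raise ValueError("rate must be a positive number")
    return math.ceil(num_frames / rate)

# A rate above 1 speeds the signal up (fewer frames);
# a rate below 1 slows it down (more frames).
for rate in (1.0, 2.0, 0.5):
    print(rate, expected_num_frames(8, rate))
```

Under this assumption, an 8-frame input stays at 8 frames for a rate of 1.0, shrinks to 4 frames at 2.0, and grows to 16 frames at 0.5.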

- Raises

TypeError – If hop_length is not of type int.

ValueError – If hop_length is not a positive number.

TypeError – If n_freq is not of type int.

ValueError – If n_freq is not a positive number.

TypeError – If fixed_rate is not of type float.

ValueError – If fixed_rate is not a positive number.

RuntimeError – If input tensor is not in shape of <…, freq, num_frame, complex=2>.
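The conditions listed above can be checked before building a pipeline. The helper below is a hypothetical pre-check written in plain Python and NumPy. It mirrors the documented rules only and is not MindSpore's own validation code, whose exact behavior and messages may differ.

```python
import numpy as np

def check_time_stretch_inputs(spec, hop_length=None, n_freq=201, fixed_rate=None):
    """Hypothetical pre-check mirroring the documented error conditions."""
    for name, value in (("hop_length", hop_length), ("n_freq", n_freq)):
        if value is None and name == "hop_length":
            continue  # None is the documented default for hop_length
        if isinstance(value, bool) or not isinstance(value, int):
            raise TypeError(f"{name} must be of type int")
        if value <= 0:
            raise ValueError(f"{name} must be a positive number")
    if fixed_rate is not None:
        if not isinstance(fixed_rate, float):
            raise TypeError("fixed_rate must be of type float")
        if fixed_rate <= 0:
            raise ValueError("fixed_rate must be a positive number")
    spec = np.asarray(spec)
    # Documented layout: <..., freq, num_frame, complex=2>
    if spec.ndim < 3 or spec.shape[-1] != 2:
        raise RuntimeError("input must have shape <..., freq, num_frame, 2>")

# Passes silently: valid arguments and a valid layout.
check_time_stretch_inputs(np.zeros((16, 8, 2)), fixed_rate=1.5)
```

Note that, following the documented rule, an integer such as `fixed_rate=2` is rejected with `TypeError`; write `2.0` instead.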

- Supported Platforms:

CPU

Examples

>>> import numpy as np
>>> import mindspore.dataset as ds
>>> import mindspore.dataset.audio as audio
>>>
>>> # Use the transform in dataset pipeline mode
>>> waveform = np.random.random([5, 16, 8, 2])  # 5 samples
>>> numpy_slices_dataset = ds.NumpySlicesDataset(data=waveform, column_names=["audio"])
>>> transforms = [audio.TimeStretch()]
>>> numpy_slices_dataset = numpy_slices_dataset.map(operations=transforms, input_columns=["audio"])
>>> for item in numpy_slices_dataset.create_dict_iterator(num_epochs=1, output_numpy=True):
...     print(item["audio"].shape, item["audio"].dtype)
...     break
(1, 16, 8, 2) float64
>>>
>>> # Use the transform in eager mode
>>> waveform = np.random.random([16, 8, 2])  # 1 sample
>>> output = audio.TimeStretch()(waveform)
>>> print(output.shape, output.dtype)
(1, 16, 8, 2) float64

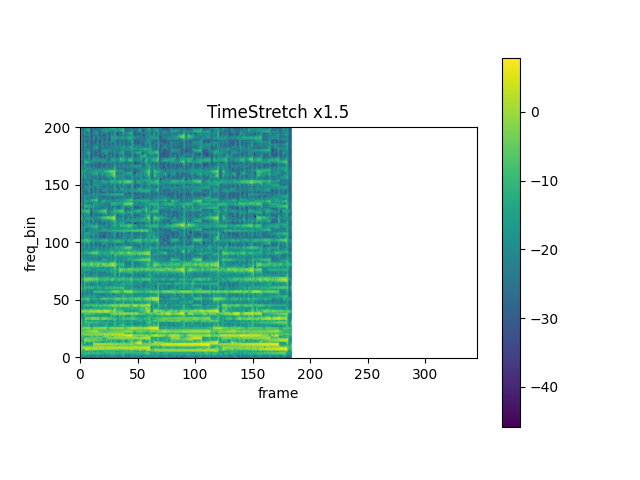

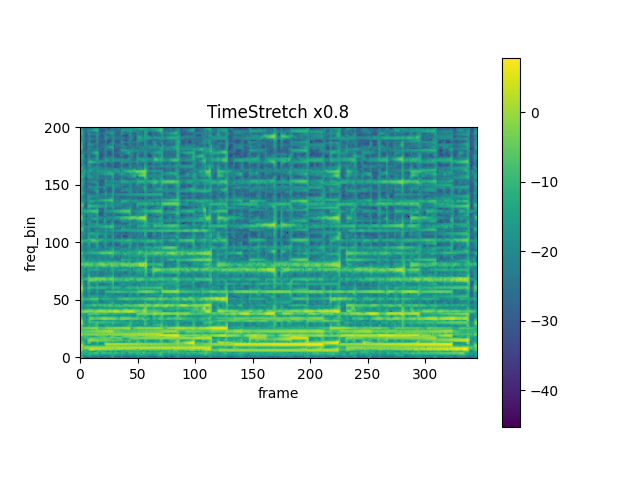
